Database Management Manual

- Overview

- User Management

- Creating new users

- Postgres

- Manually creating a new user

- SQL Server

- Manually creating a new user

- Changing passwords

- Postgres

- SQL Server

- Proxy accounts

- Postgres

- SQL Server

- Granting permission

- Postgres

- SQL Server

- Viewing your user setup

- Postgres

- SQL Server

- Data Management

- Creating new schemas

- Postgres

- SQL Server

- Tablespaces

- Postgres

- SQL Server

- Partitioning

- Postgres

- SQL Server

- Granting permission

- Postgres

- SQL Server

- Remote Database Access

- Postgres with a remote Oracle database

- SQL Server with a remote Oracle database

- Postgres with a remote SQL Server database

- SQL Server with a remote Postgres database

- Information Governance

- Auditing

- Postgres

- SQL Server

- Backup and recovery

- Postgres

- Logical Backups

- Continuous Archiving and Point-in-Time Recovery

- SQL Server

- Logical Backups

- Continuous Archiving and Point-in-Time Recovery

- Patching

- Postgres

- SQL Server

- Tuning

- Postgres

- Server Memory Tuning

- Query Tuning

- Database Space Management

- SQL Server

- Server Memory Tuning

- Query Tuning

- Database Space Management

Overview

This manual details how to manage RIF databases. See also the:

User Management

Creating new users

New users must be created in lower case, start with a letter, and only contain the characters: [a-z][0-9]_. Do not use mixed case, upper case, dashes, space or non

ASCII e.g. UTF8) characters. Beware; the database stores user names internally in upper case. This is because of the RIF’s Oracle heritage.

Postgres

Run the optional script rif40_production_user.sql. This creates a default user %newuser% with password %newpw% in database %newdb% from the command environment.

This is set from the command line using the -v newuser= -v newpw= and -v newdb= parameters. Run as a normal user or an

Administrator, using the *postgres* account:

psql -U postgres -d postgres -w -e -f rif40_production_user.sql -v newuser=kevin -v newpw=nivek -v newdb=sahsuland

- User is created with the rif_user (can create tables and views) and rif_manager roles (can also create procedures and functions);

- User can use the sahsuland database;

- Will fail to re-create a user if the user already has objects (tables, views etc);

Test connection and object privileges, access to RIF numerators and denominators:

C:\Users\phamb\Documents\GitHub\rapidInquiryFacility>psql -U kevin

Password for user kevin:

You are connected to database "sahsuland" as user "kevin" on host "localhost" at port "5432".

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: rif40_log_setup() DEFAULTED send DEBUG to INFO: off; debug function list: []

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.03s rif40_startup(): SQL> SET search_path TO kevin,rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions,rif_studies;

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.06s rif40_startup(): SQL> DROP FUNCTION IF EXISTS kevin.rif40_run_study(INTEGER, INTEGER);

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: NOTICE: function kevin.rif40_run_study(pg_catalog.int4,pg_catalog.int4) does not exist, skipping

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.14s rif40_startup(): Created temporary table: g_rif40_study_areas

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.16s rif40_startup(): Created temporary table: g_rif40_comparison_areas

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.00s rif40_startup(): PostGIS extension V2.3.5 (POSTGIS="2.3.5 r16110" GEOS="3.6.2-CAPI-1.10.2 4d2925d" PROJ="Rel. 4.9.3, 15 August 2016" GDAL="GDAL 2.2.2, released 2017/09/15" LIBXML="2.7.8" LIBJSON="0.12" RASTER)

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.02s rif40_startup(): FDW functionality disabled - FDWServerName, FDWServerType, FDWDBServer RIF parameters not set.

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.03s rif40_startup(): V$Revision: 1.11 $ rif40_geographies, rif40_tables, rif40_health_study_themes exist for user: kevin

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.03s rif40_startup(): VIEW rif40_user_version not found; rebuild forced

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.03s rif40_startup(): search_path: rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions, rif_studies, reset: rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: analyzing "kevin.t_rif40_num_denom"

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: "t_rif40_num_denom": scanned 0 of 0 pages, containing 0 live rows and 0 dead rows; 0 rows in sample, 0 estimated total rows

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.15s rif40_startup(): Created table: t_rif40_num_denom

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.29s rif40_startup(): Created view: rif40_num_denom_errors

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.34s rif40_startup(): Created view: rif40_num_denom

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.34s rif40_startup(): V$Revision: 1.11 $ Creating view: rif40_user_version

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00001.36s rif40_startup(): Deleted 0, created 6 tables/views/foreign data wrapper tables

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: SQL> SET search_path TO kevin,rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions;

DO

psql (9.6.8)

Type "help" for help.

sahsuland=> SELECT current_database() AS db_name INTO test_table;

SELECT 1

sahsuland=> SELECT * FROM test_table;

db_name

-----------

sahsuland

(1 row)

sahsuland=> SELECT * FROM rif40_num_denom;

geography | numerator_table | numerator_description | theme_description | denominator_table | denominator_description | automatic

-----------+----------------------+-----------------------------------------------+-----------------------------------+-------------------+------------------------------------------------------------------------------------------+-----------

SAHSULAND | NUM_SAHSULAND_CANCER | cancer numerator | covering various types of cancers | POP_SAHSULAND_POP | population health file | 1

(1 row)

sahsuland=> \q

C:\Users\phamb\Documents\GitHub\rapidInquiryFacility>

Manually creating a new user

These instructions are based on rif40_production_user.sql. This uses NEWUSER and NEWDB from the CMD environment.

- Change “mydatabasename” to the name of your database, e.g. sahsuland;

- Change “mydatabasenuser” to the name of your user, e.g. peter;

- Change “mydatabasepassword” to the name of your users password;

- Validate the RIF user; connect as user postgres on the database postgres:

DO LANGUAGE plpgsql $$

BEGIN

IF current_user != 'postgres' OR current_database() != 'postgres' THEN

RAISE EXCEPTION 'rif40_production_user.sql() current_user: % and current database: % must both be postgres', current_user, current_database();

END IF;

END;

$$;

- Create Login; connect as user postgres on the database mydatabasename (.e.g. sahsuland):

DO LANGUAGE plpgsql $$

DECLARE

c2 CURSOR(l_usename VARCHAR) FOR

SELECT * FROM pg_user WHERE usename = l_usename;

c3 CURSOR FOR

SELECT CURRENT_SETTING('rif40.nnewpw') AS nnewpw,

CURRENT_SETTING('rif40.newpw') AS newpw;

c4 CURSOR(l_name VARCHAR, l_pass VARCHAR) FOR

SELECT rolpassword::Text AS rolpassword,

'md5'||md5(l_pass||l_name)::Text AS password

FROM pg_authid

WHERE rolname = l_name;

c1_rec RECORD;

c2_rec RECORD;

c3_rec RECORD;

c4_rec RECORD;

--

sql_stmt VARCHAR;

u_name VARCHAR;

u_pass VARCHAR;

u_database VARCHAR;

BEGIN

u_name:='mydatabasenuser';

u_pass:='mydatabasepassword';

u_database:='mydatabasename';

--

-- Test account exists

--

OPEN c2(u_name);

FETCH c2 INTO c2_rec;

CLOSE c2;

IF c2_rec.usename IS NULL THEN

RAISE NOTICE 'C209xx: User account does not exist: %; creating', u_name;

sql_stmt:='CREATE ROLE '||u_name||

' NOSUPERUSER NOCREATEDB NOCREATEROLE INHERIT LOGIN NOREPLICATION PASSWORD '''||

CURRENT_SETTING('rif40.newpw')||'''';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

ELSE

--

OPEN c4(u_name, u_pass);

FETCH c4 INTO c4_rec;

CLOSE c4;

IF c4_rec.rolpassword IS NULL THEN

RAISE EXCEPTION 'C209xx: User account: % has a NULL password',

c2_rec.usename;

ELSIF c4_rec.rolpassword != c4_rec.password THEN

RAISE INFO 'rolpassword: "%"', c4_rec.rolpassword;

RAISE INFO 'password(%): "%"', u_pass, c4_rec.password;

RAISE EXCEPTION 'C209xx: User account: % password (%) would change; set password correctly', c2_rec.usename, u_pass;

ELSE

RAISE NOTICE 'C209xx: User account: % password is unchanged',

c2_rec.usename;

END IF;

--

IF pg_has_role(c2_rec.usename, 'rif_user', 'MEMBER') THEN

RAISE INFO 'rif40_production_user.sql() user account="%" is a rif_user', c2_rec.usename;

ELSIF pg_has_role(c2_rec.usename, 'rif_manager', 'MEMBER') THEN

RAISE INFO 'rif40_production_user.sql() user account="%" is a rif manager', c2_rec.usename;

ELSE

RAISE EXCEPTION 'C209xx: User account: % is not a rif_user or rif_manager', c2_rec.usename;

END IF;

END IF;

--

sql_stmt:='GRANT CONNECT ON DATABASE '||u_database||' to '||u_name;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='GRANT rif_manager TO '||u_name;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='GRANT rif_user TO '||u_name;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

END;

$$;

- Create user and grant roles:

DO LANGUAGE plpgsql $$

DECLARE

sql_stmt VARCHAR;

u_name VARCHAR;

u_database VARCHAR;

BEGIN

u_name:='mydatabasenuser';

u_database:='mydatabasename';

IF user = 'postgres' AND current_database() = u_database THEN

RAISE INFO 'User check: %', user;

ELSE

RAISE EXCEPTION 'C209xx: User check failed: % is not postgres on % database (%)',

user, u_database, current_database();

END IF;

--

sql_stmt:='GRANT CONNECT ON DATABASE '||u_database||' to '||u_name;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE SCHEMA IF NOT EXISTS '||u_name||' AUTHORIZATION '||u_name;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

END;

$$;

- Change the password. The password is set to mydatabasepassword.

The user specific object views: rif40_num_denom, rif40_num_denom_errors are automatically created. These must be created as the user so they run with the users

privileges and therefore only return RIF data tables to which the user has been granted access permission.

SQL Server

Run the optional script rif40_production_user.sql. This creates a default user %newuser% with password %newpw% in database %newdb% from the command environment.

This is set from the command line using the -v newuser= -v newpw= and -v newdb= parameters. Run as *Administrator*:

sqlcmd -E -b -m-1 -e -i rif40_production_user.sql -v newuser=kevin -v newpw=XXXXXXXXXXXX -v newdb=sahsuland

- User is created with the rif_user (can create tables and views) and rif_manager roles (can also create procedures and functions);

- User can use the sahsuland database;

- Will fail to re-create a user if the user already has objects (tables, views etc);

Test connection and object privileges, access to RIF numerators and denominators:

C:\Users\Peter\Documents\GitHub\rapidInquiryFacility\rifDatabase\Postgres\psql_scripts>sqlcmd -U kevin -P XXXXXXXXXXXX

1> SELECT db_name() AS db_name INTO test_table;

2> SELECT * FROM test_table;

3> go

(1 rows affected)

db_name

--------------------------------------------------------------------------------------------------------------------------------

rif40

(1 rows affected)

1> SELECT * FROM rif40_num_denom;

2> go

geography numerator_table numerator_description theme_description denominator_table denominator_description automatic

-------------------------------------------------- ------------------------------ ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- ------------------------------ ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- ---------

SAHSULAND NUM_SAHSULAND_CANCER cancer numerator covering various types of cancers POP_SAHSULAND_POP population health file 1

(1 rows affected)

1> quit

C:\Users\Peter\Documents\GitHub\rapidInquiryFacility\rifDatabase\Postgres\psql_scripts>

Manually creating a new user

These instructions are based on rif40_production_user.sql. This uses NEWUSER and NEWDB from the CMD environment.

- Change “mydatabasename” to the name of your database, e.g. sahsuland;

- Change “mydatabasenuser” to the name of your user, e.g. peter;

- Change “mydatabasepassword” to the name of your users password;

- Validate the RIF user

USE [master];

GO

DECLARE @newuser VARCHAR(MAX)='mydatabaseuser';

DECLARE @invalid_chars INTEGER;

DECLARE @first_char VARCHAR(1);

SET @invalid_chars=PATINDEX('%[^0-9a-z_]%', @newuser);

SET @first_char=SUBSTRING(@newuser, 1, 1);

IF @invalid_chars IS NULL

RAISERROR('New username is null', 16, 1, @newuser);

ELSE IF @invalid_chars > 0

RAISERROR('New username: %s contains invalid character(s) starting at position: %i.', 16, 1,

@newuser, @invalid_chars);

ELSE IF (LEN(@newuser) > 30)

RAISERROR('New username: %s is too long (30 characters max).', 16, 1, @newuser);

ELSE IF ISNUMERIC(@first_char) = 1

RAISERROR('First character in username: %s is numeric: %s.', 16, 1, @newuser, @first_char);

ELSE

PRINT 'New username: ' + @newuser + ' OK';

GO

- Create Login

USE [master];

GO

IF NOT EXISTS (SELECT * FROM sys.sql_logins WHERE name = N'mydatabaseuser')

CREATE LOGIN [mydatabaseuser] WITH PASSWORD='mydatabasepassword', CHECK_POLICY = OFF;

GO

ALTER LOGIN [mydatabaseuser] WITH DEFAULT_DATABASE = [mydatabasename];

GO

- Creare user and grant roles

USE mydatabasename;

GO

BEGIN

IF NOT EXISTS (SELECT name FROM sys.database_principals WHERE name = N'mydatabaseuser')

CREATE USER [mydatabaseuser] FOR LOGIN [mydatabaseuser] WITH DEFAULT_SCHEMA=[dbo]

ELSE ALTER USER [mydatabaseuser] WITH LOGIN=[mydatabasepassword];

--

-- Object privilege grants

--

GRANT CREATE TABLE TO [mydatabaseuser];

GRANT CREATE VIEW TO [mydatabaseuser];

--

IF NOT EXISTS (SELECT name FROM sys.schemas WHERE name = N'mydatabaseuser')

EXEC('CREATE SCHEMA [mydatabaseuser] AUTHORIZATION [mydatabasepassword]');

ALTER USER [mydatabaseuser] WITH DEFAULT_SCHEMA=[mydatabaseuser];

ALTER ROLE rif_user ADD MEMBER [mydatabaseuser];

ALTER ROLE rif_manager ADD MEMBER [mydatabaseuser];

END;

GO

- Change the password. The password is set to mydatabasepassword.

- Create user specific object views: rif40_num_denom, rif40_num_denom_errors. These must be created as the user so they run with the users privileges and therefore only return

RIF data tables to which the user has been granted access permission.

USE mydatabasename;

GO

--

-- RIF40 num_denom, rif40_num_denom_errors

--

-- needs functions:

-- rif40_is_object_resolvable

-- rif40_num_denom_validate

-- rif40_auto_indirect_checks

--

IF EXISTS (SELECT * FROM sys.objects

WHERE object_id = OBJECT_ID(N'[mydatabaseuser].[rif40_num_denom]') AND type in (N'V'))

BEGIN

DROP VIEW [mydatabaseuser].[rif40_num_denom]

END

GO

CREATE VIEW [mydatabaseuser].[rif40_num_denom] AS

WITH n AS (

SELECT n1.geography,

n1.numerator_table,

n1.numerator_description,

n1.automatic,

n1.theme_description

FROM ( SELECT g.geography,

n_1.table_name AS numerator_table,

n_1.description AS numerator_description,

n_1.automatic,

t.description AS theme_description

FROM [rif40].[rif40_geographies] g,

[rif40].[rif40_tables] n_1,

[rif40].[rif40_health_study_themes] t

WHERE n_1.isnumerator = 1 AND n_1.automatic = 1

AND [rif40].[rif40_is_object_resolvable](n_1.table_name) = 1

AND n_1.theme = t.theme) n1

WHERE [rif40].[rif40_num_denom_validate](n1.geography, n1.numerator_table) = 1

), d AS (

SELECT d1.geography,

d1.denominator_table,

d1.denominator_description

FROM ( SELECT g.geography,

d_1.table_name AS denominator_table,

d_1.description AS denominator_description

FROM [rif40].[rif40_geographies] g,

[rif40].[rif40_tables] d_1

WHERE d_1.isindirectdenominator = 1

AND d_1.automatic = 1

AND [rif40].[rif40_is_object_resolvable](d_1.table_name) = 1) d1

WHERE [rif40].[rif40_num_denom_validate](d1.geography, d1.denominator_table) = 1

AND [rif40].[rif40_auto_indirect_checks](d1.denominator_table) IS NULL

)

SELECT n.geography,

n.numerator_table,

n.numerator_description,

n.theme_description,

d.denominator_table,

d.denominator_description,

n.automatic

FROM n,

d

WHERE n.geography = d.geography

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator and indirect standardisation denominator pairs. Use RIF40_NUM_DENOM_ERROR if your numerator and denominator table pair is missing. You must have your own copy of RIF40_NUM_DENOM or you will only see the tables RIF40 has access to. Tables not rejected if the user does not have access or the table does not contain the correct geography geolevel fields.' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Geography',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'geography'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'numerator_table'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table description',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'numerator_description'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table health study theme description',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'theme_description'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'denominator_table'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table description',

@level0type=N'SCHEMA', @level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'denominator_description'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the pair automatic (0/1). Cannot be applied to direct standardisation denominator. Restricted to 1 denominator per geography. The default in RIF40_TABLES is 0 because of the restrictions.' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW', @level1name=N'rif40_num_denom',

@level2type=N'COLUMN',@level2name=N'automatic'

GO

IF EXISTS (SELECT * FROM sys.objects

WHERE object_id = OBJECT_ID(N'[mydatabaseuser].[rif40_num_denom_errors]') AND type in (N'V'))

BEGIN

DROP VIEW [mydatabaseuser].[rif40_num_denom_errors]

END

GO

CREATE VIEW [mydatabaseuser].[rif40_num_denom_errors] AS

WITH n AS (

SELECT n1.geography,

n1.numerator_table,

n1.numerator_description,

n1.automatic,

n1.is_object_resolvable,

n1.n_num_denom_validated,

n1.numerator_owner

FROM ( SELECT g.geography,

n_1.table_name AS numerator_table,

n_1.description AS numerator_description,

n_1.automatic,

[rif40].[rif40_is_object_resolvable](n_1.table_name) AS is_object_resolvable,

[rif40].[rif40_num_denom_validate](g.geography, n_1.table_name) AS n_num_denom_validated,

[rif40].[rif40_object_resolve](n_1.table_name) AS numerator_owner

FROM [rif40].[rif40_geographies] g,

[rif40].[rif40_tables] n_1

WHERE n_1.isnumerator = 1 AND n_1.automatic = 1) n1

), d AS (

SELECT d1.geography,

d1.denominator_table,

d1.denominator_description,

d1.is_object_resolvable,

d1.d_num_denom_validated,

d1.denominator_owner,

[rif40].[rif40_auto_indirect_checks](d1.denominator_table) AS auto_indirect_error

FROM ( SELECT g.geography,

d_1.table_name AS denominator_table,

d_1.description AS denominator_description,

[rif40].[rif40_is_object_resolvable](d_1.table_name) AS is_object_resolvable,

[rif40].[rif40_num_denom_validate](g.geography, d_1.table_name) AS d_num_denom_validated,

[rif40].[rif40_object_resolve](d_1.table_name) AS denominator_owner

FROM [rif40].[rif40_geographies] g,

[rif40].[rif40_tables] d_1

WHERE d_1.isindirectdenominator = 1 AND d_1.automatic = 1) d1

)

SELECT n.geography,

n.numerator_owner,

n.numerator_table,

n.is_object_resolvable AS is_numerator_resolvable,

n.n_num_denom_validated,

n.numerator_description,

d.denominator_owner,

d.denominator_table,

d.is_object_resolvable AS is_denominator_resolvable,

d.d_num_denom_validated,

d.denominator_description,

n.automatic,

CASE

WHEN d.auto_indirect_error IS NULL THEN 0

ELSE 1

END AS auto_indirect_error_flag,

d.auto_indirect_error /*,

f.create_status AS n_fdw_create_status,

f.error_message AS n_fdw_error_message,

f.date_created AS n_fdw_date_created,

f.rowtest_passed AS n_fdw_rowtest_passed */

FROM d,

n

WHERE n.geography = d.geography;

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'All possible numerator and indirect standardisation denominator pairs with error diagnostic fields. As this is a CROSS JOIN the will be a lot of output as tables are not rejected on the basis of user access or containing the correct geography geolevel fields.' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Geography',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'geography'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table owner' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'numerator_owner'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'numerator_table'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the numerator table resolvable and accessible (0/1)' ,

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'is_numerator_resolvable'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the numerator valid for this geography (0/1). If N_NUM_DENOM_VALIDATED and D_NUM_DENOM_VALIDATED are both 1 then the pair will appear in RIF40_NUM_DENOM.',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'n_num_denom_validated'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Numerator table description',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'numerator_description'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table owner',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'denominator_owner'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'denominator_table'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the denominator table resolvable and accessible (0/1)',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'is_denominator_resolvable'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the denominator valid for this geography (0/1). If N_NUM_DENOM_VALIDATED and D_NUM_DENOM_VALIDATED are both 1 then the pair will appear in RIF40_NUM_DENOM.',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'd_num_denom_validated'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table description',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'denominator_description'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Is the pair automatic (0/1). Cannot be applied to direct standardisation denominator. Restricted to 1 denominator per geography. The default in RIF40_TABLES is 0 because of the restrictions.',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'automatic'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Error flag 0/1. Denominator table with automatic set to "1" that fails the RIF40_CHECKS.RIF40_AUTO_INDIRECT_CHECKS test. Restricted to 1 denominator per geography to prevent the automatic RIF40_NUM_DENOM having >1 pair per numerator.',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'auto_indirect_error_flag'

GO

EXEC sys.sp_addextendedproperty @name=N'MS_Description',

@value=N'Denominator table with automatic set to "1" that fails the RIF40_CHECKS.RIF40_AUTO_INDIRECT_CHECKS test. Restricted to 1 denominator per geography to prevent the automatic RIF40_NUM_DENOM having >1 pair per numerator. List of geographies and tables in error.',

@level0type=N'SCHEMA',@level0name=N'mydatabaseuser', @level1type=N'VIEW',@level1name=N'rif40_num_denom_errors',

@level2type=N'COLUMN',@level2name=N'auto_indirect_error'

GO

Changing passwords

Valid characters for passwords have been tested as: [A-Z][a-z][0-9]!@$^~_-. Passwords must be up to 30 characters long; longer passwords may be supported. The following are definitely NOT

valid: SQL Server/ODBC special characters: []{}(),;?*=!@.

Use of special characters]: \/&% is not advised as command line users will need to use an escaping URI to connect.

Postgres

The file .pgpass in a user’s home directory or the file referenced by PGPASSFILE can contain passwords to be used if the connection requires a password

(and no password has been specified otherwise). On Microsoft Windows the file is named %APPDATA%\postgresql\pgpass.conf (where %APPDATA% refers to the

Application Data subdirectory in the user’s profile).

This file should contain lines of the following format:

hostname:port:database:username:password

You can add a comment to the file by preceding the line with #. Each of the first four fields can be a literal value, or *, which matches anything. The password field from the first line

that matches the current connection parameters will be used. (Therefore, put more-specific entries first when you are using wildcards.)

If an entry needs to contain : or \, escape this character with . A host name of localhost matches both TCP (host name localhost) and Unix domain socket

(pghost empty or the default socket directory) connections coming from the local machine.

On Unix systems, the permissions on .pgpass must disallow any access to world or group; achieve this by the command chmod 0600 ~/.pgpass. If the permissions are less strict

than this, the file will be ignored. On Microsoft Windows, it is assumed that the file is stored in a directory that is secure, so no special permissions check is made.

If you change a users password and you use the user from the command line (typically postgres, rif40 and the test username) change it in the <pgpass> file.

Notes:

- Your normal user and the administrator account will have separate <pgpass> files;

- The postgres account password is set by the Postgres (EnterpriseDB) installler. This password is not set in the Administrator <pgpass> file.;

- The rif40 and the test username are both set by the database install script to <username><5 digit random number><5 digit random number>.

These passwords are set in the Administrator <pgpass> file.;

- No passwords are set in the normal user <pgpass> file;

To change a Postgres password:

ALTER ROLE rif40 WITH PASSWORD 'XXXXXXXX';

SQL Server

To change a SQL server password:

ALTER LOGIN rif40 WITH PASSWORD = 'XXXXXXXX';

GO

Proxy accounts

Proxy accounts are of use to the RIF as it can allow a normal user to login as a schema owner. Good practice is not the set the schema owner passwords (e.g. rif40) as these tend to be known by

several people and tend to get written down as these accounts are infrequently used. Proxy accounts allow for privilege minimisation. Importantly the use proxy accounts is fully audited, in

particular the privilege escalation (i.e. use of the proxy).

The SAHSU Private network uses proxying for these reasons.

The RIF front end application and middleware use user name and passwords to authenticate. Therefore federated mechanism such as Kerberos and

SSPI (Windows authentication) will not work; and would require

a GSSAPI implementation in the middleware. Invariably substantial browser and server key

set-up is required and this is very difficult to set up (some years ago the SAHSU private network used this for five years; the experiment was not repeated).

Postgres

If you need to integrate into you Active Directory or authentication services you are advised to use LDAP.

This permits user name and password authentication; ldap does not support proxying. Login to the database using the command lines can then use SSPI and this can then be proxied

to allow schema access. See Postgres LDAP Authentication and

LDAP Authentication against AD

Postgres proxy accounts are controlled by pg_ident.conf in the Postgres data directory. See

Postgres Client Authentication

The map name must be one of following mappable methods from hba.conf (i.e. that support proxying):

- ident: Identification Protocol as described in RFC 1413 (INSECURE: DO NOT USE)

- peer: Peer authentication is only available on operating systems providing the getpeereid() function, the SO_PEERCRED socket parameter, or similar mechanisms. Currently that includes Linux, most flavors of BSD including OS X, and Solaris.

- gss: GSSAPI/Kerberos

- pam: Linux PAM (Pluggable authentication modules)

- sspi: Windows native autentiation (NTLM V2)

- cert: Uses SSL client certificates to perform authentication. It is therefore only available for SSL connections.

The Windows installer guide for Postgres has examples:

So, if I setup SSPI as per the examples to use SSPI in hba.conf:

#

# Active directory GSSAPI connections with pg_ident.conf maps for schema accounts

#

hostssl sahsuland all 127.0.0.1/32 sspi map=sahsuland

hostssl sahsuland all ::1/128 sspi map=sahsuland

hostssl sahsuland_dev all 127.0.0.1/32 sspi map=sahsuland_dev

hostssl sahsuland_dev all ::1/128 sspi map=sahsuland_dev

With the maps sahsuland and sahsuland_dev defined in ident.conf:

# MAPNAME SYSTEM-USERNAME PG-USERNAME

#

sahsuland pch pop

sahsuland pch gis

sahsuland pch rif40

sahsuland pch pch

#

sahsuland_dev pch pop

sahsuland_dev pch gis

sahsuland_dev pch rif40

sahsuland_dev pch pch

sahsuland_dev pch postgres

Set the RIF40 password to an impossible value:

ALTER ROLE rif40 WITH PASSWORD 'md5ac4bbe016b8XXXXXXXXXX6981f240dcae';

Finally, optioanlly add the passwords to the

Pgpass file.

I can then logon as rif40 using SSPI:

C:\Users\phamb\OneDrive\SEER Data>psql -U rif40

You are connected to database "sahsuland" as user "rif40" on host "localhost" at port "5432".

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: rif40_log_setup() DEFAULTED send DEBUG to INFO: off; debug function list: []

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.01s rif40_startup(): search_path not set for: rif40

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.01s rif40_startup(): SQL> DROP FUNCTION IF EXISTS rif40.rif40_run_study(INTEGER, INTEGER);

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: NOTICE: function rif40.rif40_run_study(pg_catalog.int4,pg_catalog.int4) does not exist, skipping

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.06s rif40_startup(): Created temporary table: g_rif40_study_areas

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.08s rif40_startup(): Created temporary table: g_rif40_comparison_areas

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.15s rif40_startup(): PostGIS extension V2.3.5 (POSTGIS="2.3.5 r16110" GEOS="3.6.2-CAPI-1.10.2 4d2925d" PROJ="Rel. 4.9.3, 15 August 2016" GDAL="GDAL 2.2.2, released 2017/09/15" LIBXML="2.7.8" LIBJSON="0.12" RASTER)

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.15s rif40_startup(): FDW functionality disabled - FDWServerName, FDWServerType, FDWDBServer RIF parameters not set.

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.15s rif40_startup(): V$Revision: 1.11 $ DB version $Revision: 1.11 $ matches

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.15s rif40_startup(): V$Revision: 1.11 $ rif40_geographies, rif40_tables, rif40_health_study_themes exist for user: rif40

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.15s rif40_startup(): search_path: public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions, reset: rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.16s rif40_startup(): Deleted 0, created 2 tables/views/foreign data wrapper tables

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: SQL> SET search_path TO rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions;

DO

psql (9.6.8)

Type "help" for help.

sahsuland=>

SQL Server

This needs to be investigated as it is not certain SQL Server has the correct functionality and the setup would need to be trialled. See:

Granting permission

The RIF is setup so that three roles control access to the application:

- rif_user: User level access to the application with full access to data at the highest resolution.

No ability to change the RIF configuration or to add more data;

- rif_manager: Manager level access to the application with full access to data at the highest resolution.

Ability to change the RIF configuration. No ability by default to add data to the RIF. Data is normally added

using the schema owner account (rif40); see the above section on proxying to access the schema account user

a manager accounts credentials.

- rif_student. Restricted access to the application with controlled access to data at the higher resolutions.

No ability to change the RIF configuration or to add more data;

Access to data is controlled by the permissions granted to that data and not by the RIF.

- In the SAHSULAND example database data access is granted to rif_user, rif_manager and rif_student.

- In the SEER dataset data access is granted to seer_user.

Postgres

To create the SEER_USER role and grant it to a user (peter) logon as the administrator (postgres):

psql -U postgres -d postgres

CREATE ROLE seer_user;

GRANT seer_user TO peter;

SQL Server

To create the SEER_USER role and grant it to a user (peter) logon as the administrator in an Administrator

cmd window:

sqlcmd -E

USE sahsuland;

IF DATABASE_PRINCIPAL_ID('seer_user') IS NULL

CREATE ROLE [seer_user];

SELECT name, type_desc FROM sys.database_principals WHERE name LIKE '%seer_user%';

ALTER ROLE [seer_user] ADD MEMBER [peter];

GO

Viewing your user setup

Postgres

To view roles, privileges and role membership:

C:\Users\phamb\OneDrive\SEER Data>psql -U postgres -d sahsuland

You are connected to database "sahsuland" as user "postgres" on host "localhost" at port "5432".

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: +00000.12s rif40_startup(): disabled - user postgres is not or has rif_user or rif_manager role

psql:C:/Program Files/PostgreSQL/9.6/etc/psqlrc:48: INFO: SQL> SET search_path TO postgres,rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions;

DO

psql (9.6.8)

Type "help" for help.

sahsuland-# \du

List of roles

Role name | Attributes | Member of

-------------------------+------------------------------------------------------------+----------------------------------

gis | | {}

kevin | | {rif_manager,rif_user}

notarifuser | | {}

peter | | {rif_manager,rif_user,seer_user}

pop | | {}

postgres | Superuser, Create role, Create DB, Replication, Bypass RLS | {}

rif40 | | {}

rif_manager | Cannot login | {}

rif_no_suppression | Cannot login | {}

rif_student | Cannot login | {}

rif_user | Cannot login | {}

rifupg34 | Cannot login | {}

seer_user | Cannot login | {}

test_rif_manager | | {rif_manager}

test_rif_no_suppression | | {rif_no_suppression}

test_rif_student | | {rif_student}

test_rif_user | | {rif_user}

To view roles and permissions granted to an object:

sahsuland-# \dp rif40_tables

Access privileges

Schema | Name | Type | Access privileges | Column privileges | Policies

--------+--------------+-------+------------------------+-------------------+----------

rif40 | rif40_tables | table | rif40=arwdDxt/rif40 +| |

| | | rif_manager=arwd/rif40+| |

| | | =rx/rif40 | |

(1 row)

Where the access privileges (+ is a line continuation character) are:

- r: SELECT (“read”)

- w: UPDATE (“write”)

- a: INSERT (“append”)

- d: DELETE

- D: TRUNCATE

- x: REFERENCES

- t: TRIGGER

- X: EXECUTE

- U: USAGE

- C: CREATE

- c: CONNECT

- T: TEMPORARY

SQL Server

To view roles, as an administrator sqlcmd -E -d sahsuland:

SELECT name, type_desc, default_schema_name, authentication_type_desc

FROM sys.database_principals;

GO

name type_desc default_schema_name authentication_type_desc

-------------------------------------------------------------------------------------------------------------------------------- ------------------------------------------------------------ -------------------------------------------------------------------------------------------------------------------------------- ------------------------------------------------------------

public DATABASE_ROLE NULL NONE

dbo WINDOWS_USER dbo WINDOWS

guest SQL_USER guest NONE

INFORMATION_SCHEMA SQL_USER NULL NONE

sys SQL_USER NULL NONE

rif40 SQL_USER rif40 INSTANCE

rif_manager DATABASE_ROLE NULL NONE

rif_user DATABASE_ROLE NULL NONE

rif_student DATABASE_ROLE NULL NONE

rif_no_suppression DATABASE_ROLE NULL NONE

notarifuser DATABASE_ROLE NULL NONE

peter SQL_USER peter INSTANCE

seer_user DATABASE_ROLE NULL NONE

db_owner DATABASE_ROLE NULL NONE

db_accessadmin DATABASE_ROLE NULL NONE

db_securityadmin DATABASE_ROLE NULL NONE

db_ddladmin DATABASE_ROLE NULL NONE

db_backupoperator DATABASE_ROLE NULL NONE

db_datareader DATABASE_ROLE NULL NONE

db_datawriter DATABASE_ROLE NULL NONE

db_denydatareader DATABASE_ROLE NULL NONE

db_denydatawriter DATABASE_ROLE NULL NONE

(22 rows affected)

To view server roles, as an administrator sqlcmd -E -d sahsuland:

SELECT sys.server_role_members.role_principal_id, role.name AS RoleName,

sys.server_role_members.member_principal_id, member.name AS MemberName

FROM sys.server_role_members

JOIN sys.server_principals AS role

ON sys.server_role_members.role_principal_id = role.principal_id

JOIN sys.server_principals AS member

ON sys.server_role_members.member_principal_id = member.principal_id;

GO

role_principal_id RoleName member_principal_id MemberName

----------------- ------------- ------------------- --------------------------------------------------------------------------------------------------------------------------------

3 sysadmin 1 sa

3 sysadmin 259 DESKTOP-4P2SA80\admin

3 sysadmin 260 NT SERVICE\SQLWriter

3 sysadmin 261 NT SERVICE\Winmgmt

3 sysadmin 262 NT Service\MSSQLSERVER

3 sysadmin 264 NT SERVICE\SQLSERVERAGENT

10 bulkadmin 286 rif40

10 bulkadmin 287 peter

(8 rows affected)

To view role membership, as an administrator sqlcmd -E -d sahsuland:

SELECT DP1.name AS DatabaseRoleName, isnull (DP2.name, 'No members') AS DatabaseUserName

FROM sys.database_role_members AS DRM

RIGHT OUTER JOIN sys.database_principals AS DP1

ON DRM.role_principal_id = DP1.principal_id

LEFT OUTER JOIN sys.database_principals AS DP2

ON DRM.member_principal_id = DP2.principal_id

WHERE DP1.type = 'R'

ORDER BY DP1.name;

DatabaseRoleName DatabaseUserName

-------------------------------------------------------------------------------------------------------------------------------- -----------------

db_accessadmin No members

db_backupoperator No members

db_datareader No members

db_datawriter No members

db_ddladmin No members

db_denydatareader No members

db_denydatawriter No members

db_owner dbo

db_securityadmin No members

notarifuser No members

public No members

rif_manager peter

rif_no_suppression No members

rif_student No members

rif_user peter

seer_user peter

(16 rows affected)

To view roles and permissions granted to an object, as an administrator sqlcmd -E -d sahsuland:

sp_helprotect @username = 'peter'

GO

Owner Object Grantee Grantor ProtectType Action Column

---------------------- -------------------- ---------- ---------- ----------- -------------------------------- ------------------

rif_studies s1_extract peter rif40 Grant Delete .

rif_studies s1_extract peter rif40 Grant Insert .

rif_studies s1_extract peter rif40 Grant Select (All+New)

rif_studies s1_map peter rif40 Grant Insert .

rif_studies s1_map peter rif40 Grant Select (All+New)

rif_studies s1_map peter rif40 Grant Update (All+New)

rif_studies s2_extract peter rif40 Grant Delete .

rif_studies s2_extract peter rif40 Grant Insert .

rif_studies s2_extract peter rif40 Grant Select (All+New)

rif_studies s2_map peter rif40 Grant Insert .

rif_studies s2_map peter rif40 Grant Select (All+New)

rif_studies s2_map peter rif40 Grant Update (All+New)

rif_studies s3_extract peter rif40 Grant Delete .

rif_studies s3_extract peter rif40 Grant Insert .

rif_studies s3_extract peter rif40 Grant Select (All+New)

rif_studies s3_map peter rif40 Grant Insert .

rif_studies s3_map peter rif40 Grant Select (All+New)

rif_studies s3_map peter rif40 Grant Update (All+New)

rif_studies s4_extract peter rif40 Grant Delete .

rif_studies s4_extract peter rif40 Grant Insert .

rif_studies s4_extract peter rif40 Grant Select (All+New)

rif_studies s4_map peter rif40 Grant Insert .

rif_studies s4_map peter rif40 Grant Select (All+New)

rif_studies s4_map peter rif40 Grant Update (All+New)

rif_studies s5_extract peter rif40 Grant Delete .

rif_studies s5_extract peter rif40 Grant Insert .

rif_studies s5_extract peter rif40 Grant Select (All+New)

rif_studies s5_map peter rif40 Grant Insert .

rif_studies s5_map peter rif40 Grant Select (All+New)

rif_studies s5_map peter rif40 Grant Update (All+New)

. . peter dbo Grant CONNECT .

. . peter dbo Grant Create Function .

. . peter dbo Grant Create Procedure .

. . peter dbo Grant Create Table .

. . peter dbo Grant Create View .

. . peter dbo Grant SHOWPLAN .

(36 rows affected)

Data Management

Creating new schemas

The RIF install scripts create all the schemas required by the RIF. SQL Server does not have a search path or SYNONYNs so all schemas are hard coded.

Postgres

See: CREATE SCHEMA. Normally schema schema is owned by a role (e.g. rif40) and then

access is granted as required to other roles. New schemas will needs to be added to the default search path either for the roles or possibly at the system level.

Care needs to be taken *NOT to break the RIF. The default search path for a RIF database isL:

ALTER DATABASE sahsuland SET search_path TO rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions;

DO NOT MOVE RIF objects in the following schemas without extensive testing for hard coded schemas:

- rif40, rif_data, rif40_sql_pkg, rif_studies

The users schema is prepended to the search path on login:

sahsuland=> show search_path;

search_path

-------------------------------------------------------------------------------------------------------------

peter, rif40, public, topology, gis, pop, rif_data, data_load, rif40_sql_pkg, rif_studies, rif40_partitions

(1 row)

THEREFORE BEWARE OF CREATING OBJECTS WITH THE SAME NAME AS A RIF OBJECT on Postgres. They will be used in preference to the RIF40 schema object!

SQL Server

SQL Server does not have a search path or SYNONYNs so all schemas are hard coded and locations should NOT be changed.

Tablespaces

Postgres

Tablespaces in PostgreSQL allow database administrators to define locations in the file system where the files representing database objects can be stored. Once created, a

tablespace can be referred to by name when creating database objects.

By using tablespaces, an administrator can control the disk layout of a PostgreSQL installation. This is useful in at least two ways. First, if the partition or volume on

which the cluster was initialized runs out of space and cannot be extended, a tablespace can be created on a different partition and used until the system can be reconfigured.

Second, tablespaces allow an administrator to use knowledge of the usage pattern of database objects to optimize performance. For example, an index which is very heavily used

can be placed on a very fast, highly available disk, such as an expensive solid state device. At the same time a table storing archived data which is rarely used or not performance critical could be stored on a less expensive, slower disk system.

See: Tablespaces

SQL Server

SQL Server does not have the concept of tablespaces.

Partitioning

The SAHSU version 3.1 RIF was extensively partitioned; in particular the calculation tables and the result table rif_results needed to be partitioned on system that had run thousands of

studies will many millions of result rows and billions of extract calculation rows. Hash partitioning was retro fitted to the RIF calculation and results tables and this gave a useful

performance gain.

The new V4.0 RIF uses separate extract and results tables so does not need partitioning of the internal tables. The geometry tables on Postgres are partitioned and this gave a useful

performance gain.

The SAHSU Oracle database performance has benefited from:

- Complete partitioning of all health, population and covariate data;

- Allowing the use of limited parallelisation in queries, inserts and index creation;

- Use of index organised denominator and covariate tables. Note that by default all tables are index organised on SQL Server;

The RIF currently [deliberately] extracts data year by year and so explicit disables effective parallelisation in the extract.

Postgres

Currently only the geometry tables, e.g. rif_data.geometry_sahsuland are partitioned using inheritance and custom triggers.

Postgres 10 has native support for partitioning, see: Postgres 10 partitioning.

The implementation is still incomplete and the following limitations apply to partitioned tables:

- There is no facility available to create the matching indexes on all partitions automatically. Indexes must be added to each partition with separate commands. This also means that

there is no way to create a primary key, unique constraint, or exclusion constraint spanning all partitions; it is only possible to constrain each leaf partition individually.

- Since primary keys are not supported on partitioned tables, foreign keys referencing partitioned tables are not supported, nor are foreign key references from a partitioned table

to some other table.

- Using the ON CONFLICT clause with partitioned tables will cause an error, because unique or exclusion constraints can only be created on individual partitions. There is no support

for enforcing uniqueness (or an exclusion constraint) across an entire partitioning hierarchy.

- An UPDATE that causes a row to move from one partition to another fails, because the new value of the row fails to satisfy the implicit partition constraint of the original partition.

- Row triggers, if necessary, must be defined on individual partitions, not the partitioned table.

See Postgres Patching

for a description of historic Postgres partitioning. The partitioning on the geometry tables uses the range

partitioning schema but generates the code directly. This functionality is part of the tile maker.

The following partitioning limitations are scheduled to be fixed in Postgres 11:

- Executor-stage partition pruning or faster child table pruning or parallel partition processing (i.e. partition elimination using bind variables). This in particular will effect the

RIF as the year by year extract uses bind variables and will probably not partition eliminate correctly;

- Hash partitioning;

- UPDATEs that cause rows to move from one partition to another;

- Support for routing tuples to partitions that are foreign tables;

- Support for index constraints, such as UNIQUE, across the entire partition tree; indexes need to be defined on the individual leaf partitions (unique indexes span only the individual partitions);

- Support for referencing regular tables from partitioned parent tables;

- Support for “catch-all” / “fallback” / “default” partition.

There is no support currently planned for:

- Referencing partitioned parent tables in foreign key relationships;

- “Splitting” or “merging” partitions using dedicated commands;

- Automatic creation of partitions (e.g. for values not covered).

An example of Postgres 10 native partitioning. With Postgres each partition has to be created manually:

--

-- Create EWS2011_POPULATION denominator table

--

CREATE UNLOGGED TABLE rif_data.ews2011_population

(

year INTEGER NOT NULL,

coa2011 VARCHAR(10) NOT NULL,

age_sex_group INTEGER NOT NULL, -- RIF age_sex_group 1 (21 bands),

population NUMERIC(5,1) NULL,

cntry2011 VARCHAR(10) NOT NULL,

gor2011 VARCHAR(10) NOT NULL,

ladua2011 VARCHAR(10) NOT NULL,

msoa2011 VARCHAR(10) NOT NULL,

lsoa2011 VARCHAR(10) NOT NULL,

scntry2011 VARCHAR(10) NOT NULL

) PARTITION BY LIST (year);

--

-- Create partitions

--

DO LANGUAGE plpgsql $$

DECLARE

sql_stmt VARCHAR;

i INTEGER;

BEGIN

FOR i IN 1981 .. 2014 LOOP

sql_stmt:='CREATE TABLE rif_data.ews2011_population_y'||i||' PARTITION OF rif_data.ews2011_population

FOR VALUES IN ('||i||')';

--

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

END LOOP;

END;

$$;

--

-- Load data using \copy

--

\copy rif_data.ews2011_population FROM 'ews2011_population.csv' WITH CSV HEADER;

DO LANGUAGE plpgsql $$

DECLARE

sql_stmt VARCHAR;

i INTEGER;

BEGIN

FOR i IN 1981 .. 2014 LOOP

--

-- Add constraints

--

sql_stmt:='ALTER TABLE rif_data.ews2011_population_y'||i||' ADD CONSTRAINT ews2011_population_y'||i||'_pk'||

' PRIMARY KEY (coa2011, age_sex_group)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='ALTER TABLE rif_data.ews2011_population_y'||i||' ADD CONSTRAINT ews2011_population_y'||i||'_asg_ck'||

' CHECK (age_sex_group >= 100 AND age_sex_group <= 121 OR age_sex_group >= 200 AND age_sex_group <= 221)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

--

-- Convert to index organised table

--

sql_stmt:='CLUSTER rif_data.ews2011_population_y'||i||' USING ews2011_population_y'||i||'_pk';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

--

-- Indexes

--

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_age_sex_group'||

' ON rif_data.ews2011_population_y'||i||'(age_sex_group)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_scntry2011'||

' ON rif_data.ews2011_population_y'||i||'(scntry2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_cntry2011'||

' ON rif_data.ews2011_population_y'||i||'(cntry2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_gor2011'||

' ON rif_data.ews2011_population_y'||i||'(gor2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_ladua2011'||

' ON rif_data.ews2011_population_y'||i||'(ladua2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_msoa2011'||

' ON rif_data.ews2011_population_y'||i||'(msoa2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

sql_stmt:='CREATE INDEX ews2011_population_y'||i||'_lsoa2011'||

' ON rif_data.ews2011_population_y'||i||'(lsoa2011)';

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

--

-- Analyze

--

sql_stmt:='ANALYZE rif_data.ews2011_population_y'||i;

RAISE INFO 'SQL> %;', sql_stmt::VARCHAR;

EXECUTE sql_stmt;

END LOOP;

END;

$$;

SQL Server

SQL Server supports table and index partitioning, see Partitioned Tables and Indexes

Beware of the SQL Server partitioning and licensing conditions;

you may need a full enterprise license.

An example of SQL Server native partitioning:

- Create the partition function and scheme as an administrator:

CREATE PARTITION FUNCTION [pf_ews2011_population_year](SMALLINT) AS RANGE LEFT FOR VALUES (

1981, 1982, 1983, 1984, 1985, 1986, 1987, 1988, 1989,

1990, 1991, 1992, 1993, 1994, 1995, 1996, 1997, 1998, 1999,

2000, 2001, 2002, 2003, 2004, 2005, 2006, 2007, 2008, 2009,

2010, 2011, 2012, 2013, 2014);

GO

CREATE PARTITION SCHEME [pf_ews2011_population_year] AS PARTITION [pf_ews2011_population_year] ALL TO ([PRIMARY])

GO

- Create the objects as the schema owner:

--

-- Create EWS2011_POPULATION denominator table

--

CREATE TABLE rif_data.ews2011_population

(

year SMALLINT NOT NULL,

coa2011 VARCHAR(10) NOT NULL,

age_sex_group INTEGER NOT NULL, -- RIF age_sex_group 1 (21 bands)

population NUMERIC(5,1),

cntry2011 VARCHAR(10) NOT NULL,

gor2011 VARCHAR(10) NOT NULL,

ladua2011 VARCHAR(10) NOT NULL,

lsoa2011 VARCHAR(10) NOT NULL,

msoa2011 VARCHAR(10) NOT NULL,

scntry2011 VARCHAR(2) NOT NULL,

CONSTRAINT ews2011_population_pk PRIMARY KEY (year, coa2011, age_sex_group)

) ON pf_ews2011_population_year (year) WITH (DATA_COMPRESSION = PAGE);

GO

--

-- Load data using BULK INSERT

--

BULK INSERT rif_data.ews2011_population

FROM '$(pwd)\ews2011_population.csv' -- Note use of pwd; set via -v pwd="%cd%" in the sqlcmd command line

WITH

(

FIRSTROW = 2,

FORMATFILE = '$(pwd)\ews2011_population.fmt', -- Use a format file

TABLOCK -- Table lock

);

GO

--

-- Enable constraints

--

ALTER TABLE rif_data.ews2011_population ADD CONSTRAINT ews2011_population_asg_ck CHECK (age_sex_group >= 100 AND age_sex_group <= 121 OR age_sex_group >= 200 AND age_sex_group <= 221);

GO

--

-- Partitioned Indexes

--

CREATE INDEX ews2011_population_age_sex_group ON rif_data.ews2011_population(age_sex_group) ON pf_ews2011_population_year (year);

GO

CREATE INDEX ews2011_population_ews2011_cntry2011 ON rif_data.ews2011_population (cntry2011) ON pf_ews2011_population_year (year);

GO

CREATE INDEX ews2011_population_ews2011_gor2011 ON rif_data.ews2011_population (gor2011) ON pf_ews2011_population_year (year);

GO

CREATE INDEX ews2011_population_ews2011_ladua2011 ON rif_data.ews2011_population (ladua2011) ON pf_ews2011_population_year (year);

GO

CREATE INDEX ews2011_population_ews2011_lsoa2011 ON rif_data.ews2011_population (lsoa2011) ON pf_ews2011_population_year (year);

GO

CREATE INDEX ews2011_population_ews2011_msoa2011 ON rif_data.ews2011_population (msoa2011) ON pf_ews2011_population_year (year);

GO

Granting permission

Tables or views may be granted directly to the user or indirectly via a role. Good administration proactive is to grant via a role.

To grant via a role, you must first create a role. for example create and GRANT seer_user role to a user peter:

Postgres

Logon as the Postgres saperuser postgres” or other role with the *superuser privilege.

psql -U postgres -d postgres

CREATE ROLE seer_user;

GRANT seer_user TO peter;

There is no CREATE ROLE IF NOT EXIST.

DO $$

BEGIN

IF NOT EXISTS (SELECT 1 FROM pg_roles WHERE rolname = 'seer_user') THEN

CREATE ROLE seer_user;

END IF;

END

$$;

To view all roles: SELECT * FROM pg_roles;

SQL Server

sqlcmd -E

USE sahsuland;

IF DATABASE_PRINCIPAL_ID('seer_user') IS NULL

CREATE ROLE [seer_user];

ALTER ROLE [seer_user] ADD MEMBER [peter];

GO

To view all roles: SELECT name, type_desc FROM sys.database_principals;

Remote Database Access

The RIF supports SQL/MED SQL Management of External Data

Postgres with a remote Oracle database

The uses the Oracle foreign data wrapper with downloads for Windows at:

(https://github.com/laurenz/oracle_fdw/releases/tag/ORACLE_FDW_2_1_0).

On Windows you may get: ERROR: could not load library “C:/POSTGR~1/pg10/../pg10/lib/postgresql/oracle_fdw.dll”: The specified module could not

be found. There us a good article explaining what to do at: Cannot load oracle_fdw.dll under Windows Server 2012 R2 #160. The key

issue is the path:

- The Postgres bin directory: *C:\PostgreSQL\pg10\bin”;

- The extension folder: *C:\PostgreSQL\pg10\lib\postgresql”;

- The Oracle instant client directory (I choose: C:\Program Files\Oracle Instant Client\instantclient_18_3);

The local Postgres environment setup file C:\PostgreSQL\pg10\pg10-env.bat was changed from:

set PATH=C:\POSTGR~1\pg10\bin;%PATH%

To:

set PATH=C:\POSTGR~1\pg10\bin;C:\POSTGR~1\pg10\lib\postgresql;C:\PROGRA~1\ORACLE~1\INSTAN~1;%PATH%

The server was then restarted. Note that the path is in the old DOS format.

-

Create the extension as Postgres in the production database:

CREATE EXTENSION oracle_fdw;

-

Test extension. Note the Oracle instant client:

SELECT oracle_diag();

oracle_diag

-------------------------------------------------------------

oracle_fdw 2.1.0, PostgreSQL 10.5, Oracle client 18.3.0.0.0

(1 row)

-

Create a remote server and grant access to RIF users:

CREATE SERVER oradb FOREIGN DATA WRAPPER oracle_fdw

OPTIONS (dbserver '//dbserver.mydomain.com:1521/ORADB');

GRANT USAGE ON FOREIGN SERVER oradb TO rif_user, rif_manager;

-

Create a remote user. This will not normally be a schema owner:

CREATE USER MAPPING FOR peter SERVER oradb

OPTIONS (user 'orapeter', password 'oraretep');

-

Create a remote table link:

CREATE FOREIGN TABLE rif_data.oratab (

id integer OPTIONS (key 'true') NOT NULL,

text character varying(30),

floating double precision NOT NULL

) SERVER oradb OPTIONS (schema 'ORAUSER', table 'ORATAB');

- Test access:

SELECT FROM rif_data.oratab LIMIT 20;

- There is no need to grant access on the foreign table to specific users, the user mapping controls the access.

SQL Server with a remote Oracle database

- Requires the Oracle instant client (see Postgres);

- Download 64-bit Oracle Data Access Components (ODAC)

- Unzip the download and CD into its base, run

install.bat all c:\oracle odac

C:\>cd C:\Users\support\Downloads\ODAC122010Xcopy_x64

C:\Users\support\Downloads\ODAC122010Xcopy_x64>install.bat all c:\oracle odac

This installs in C:\oracle.

-

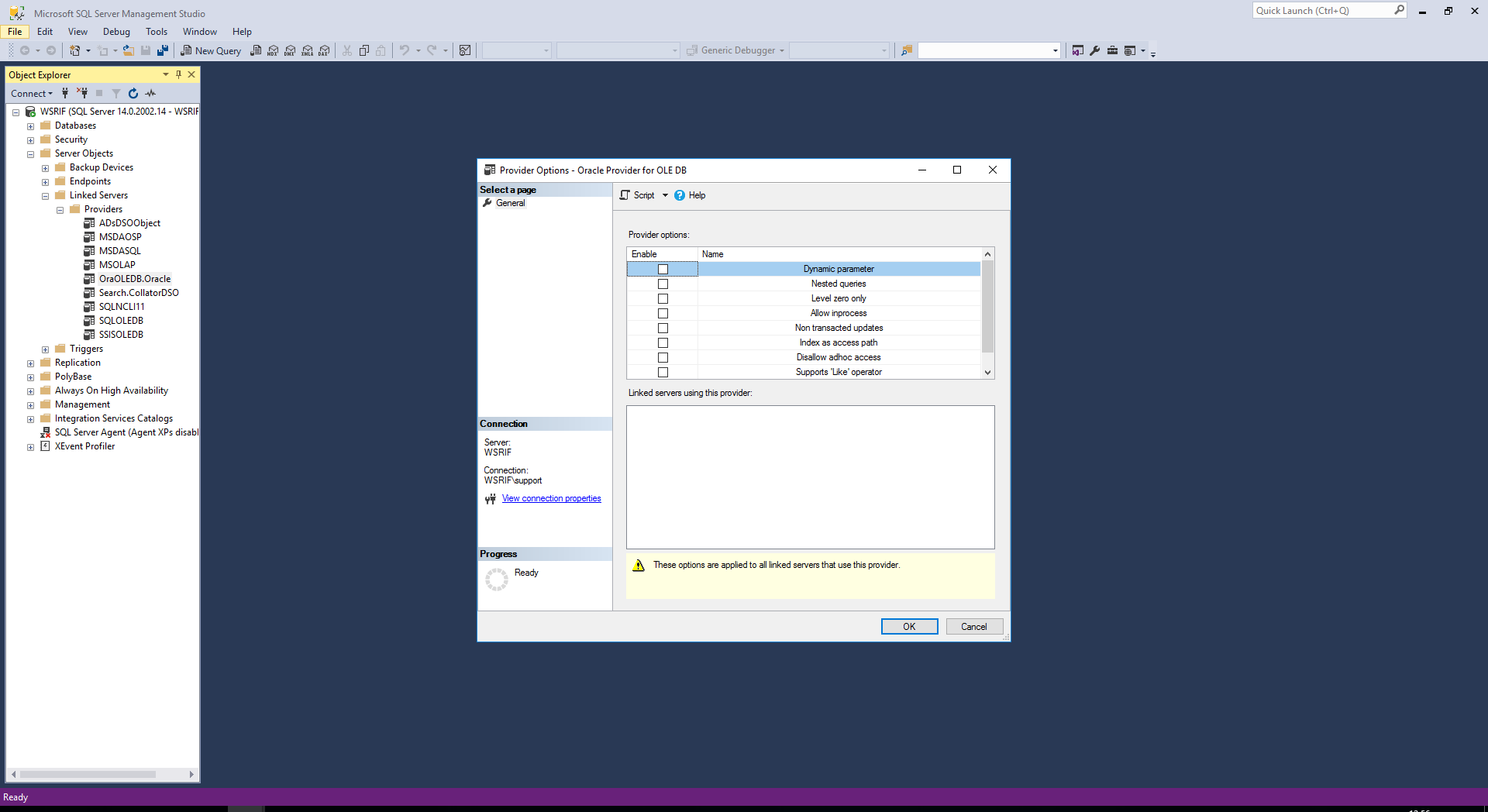

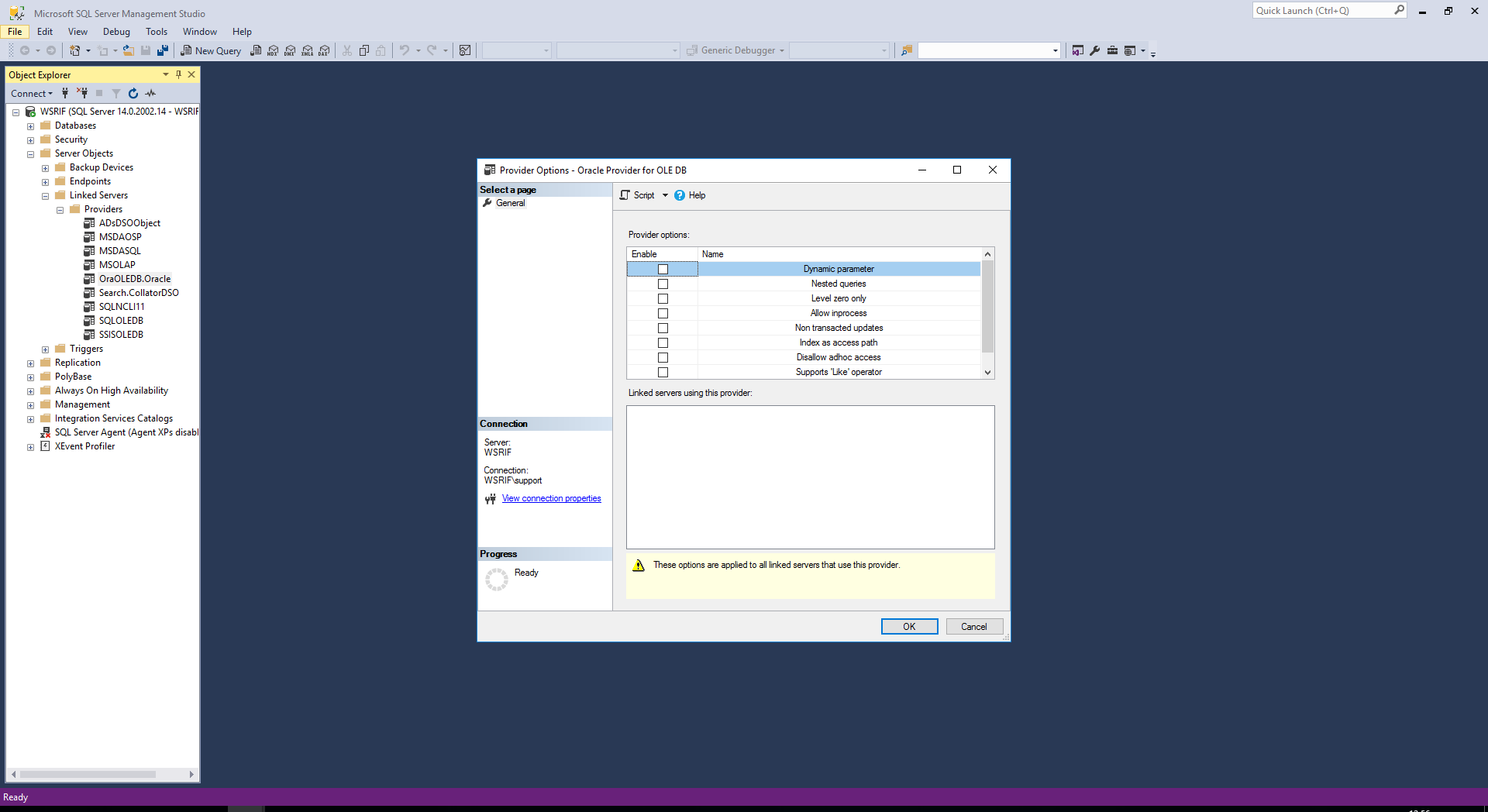

Check the Oracle OLEDB provider is picked up in SQL Server manager:

-

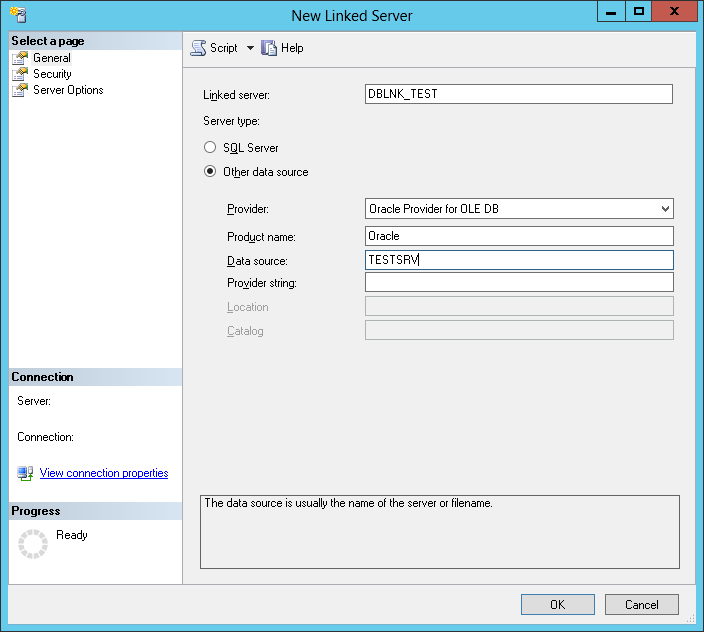

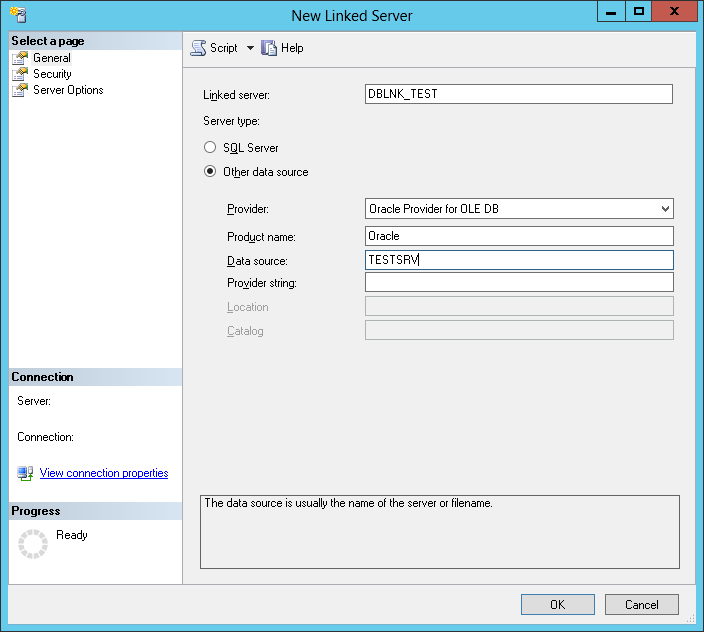

Create a remote link to the Oracle database. A schema account will be required:

https://www.sqlshack.com/link-sql-server-oracle-database/

- Create a VIEW to the remote abject in the rif_data schema:

CREATE VIEW rif_data.msqltab AS

SELECT * FROM {remote_db].[remote_schema].[remote_table_or_view];

- Grant access to local user:

GRANT SELECT ON rif_data.msqltab TO peter;

- Test access:

SELECT TOP 20 FROM rif_data.msqltab;

Postgres with a remote SQL Server database

This requires TDS foreign data wrapper and is not currently compiled for Windows.

SQL Server with a remote Postgres database

This would require PostgreSQL OLE DB Provider project and is no longer under active

development (last version 1.0.20 from April 2006. There is a commercial driver available PGNP OLEDB Providers for PostgreSQL, Greenplum and Redshift

for $498. The PostgreSQL Global Development Group do not endorse or recommend any products listed, and cannot vouch for the

quality or reliability of any of them; and neither does SAHSU!

This currently covers:

Auditing

Postgres

Basic statement logging can be provided by the standard logging facility with the configuration parameter log_statement = all.

Postgres has an extension pgAudit which provides much more auditing, however the Enterprise DB installer does not include Postgres

extensions (apart from PostGIS). EnterpiseDB Postgres has its own auditing subsystem (*edb_audit), but this is is paid for item. To use pgAudit the module must be compile from source

To configure Postgres server error reporting and logging set the following Postgres system parameters see

Postgres tuning for details on how to set parameters:

log_statement = all. The default is ‘none’;Set log_min_error_statement = error [default] or lower;log_error_verbosity = verbose;log_connections = on;log_disconnections = on;log_destinstion = stderr, eventlog, csvlog. Other choices are csvlog or syslog*

Parameters can be set in the postgresql.conf file or on the server command line. This is stored in the database cluster’s data directory, e.g. C:\Program Files\PostgreSQL\9.6\data. Beware, you can

move the data directory to a solid state disk, mine is: E:\Postgres\data! Check the startup parameters in the Windows services app for the “-D” flag:

"C:\Program Files\PostgreSQL\9.6\bin\pg_ctl.exe" runservice -N "postgresql-x64-9.6" -D "E:\Postgres\data" -w

If you are using CSV log files set:

logging_collector = on;log_filename = postgresql-%Y-%m-%d.log and log_rotation_age = 1440 (in minutes) to provide a consistent, predictable naming scheme for your log files. This lets you predict what the file name will be and know

when an individual log file is complete and therefore ready to be imported. The log filename is in strftime() format;log_rotation_size = 0 to disable size-based log rotation, as it makes the log file name difficult to predict;log_truncate_on_rotation = on to on so that old log data isn’t mixed with the new in the same file.

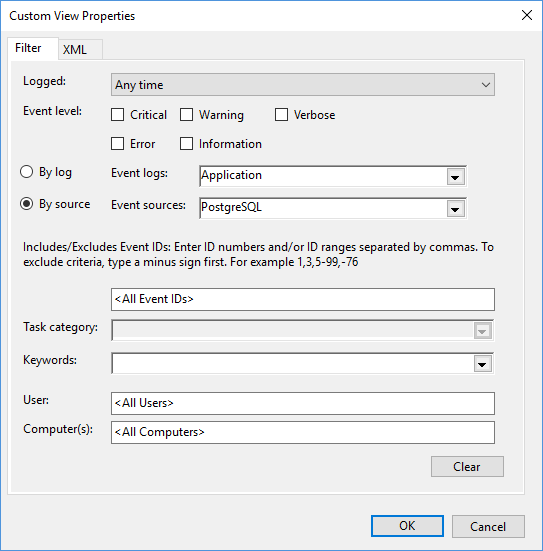

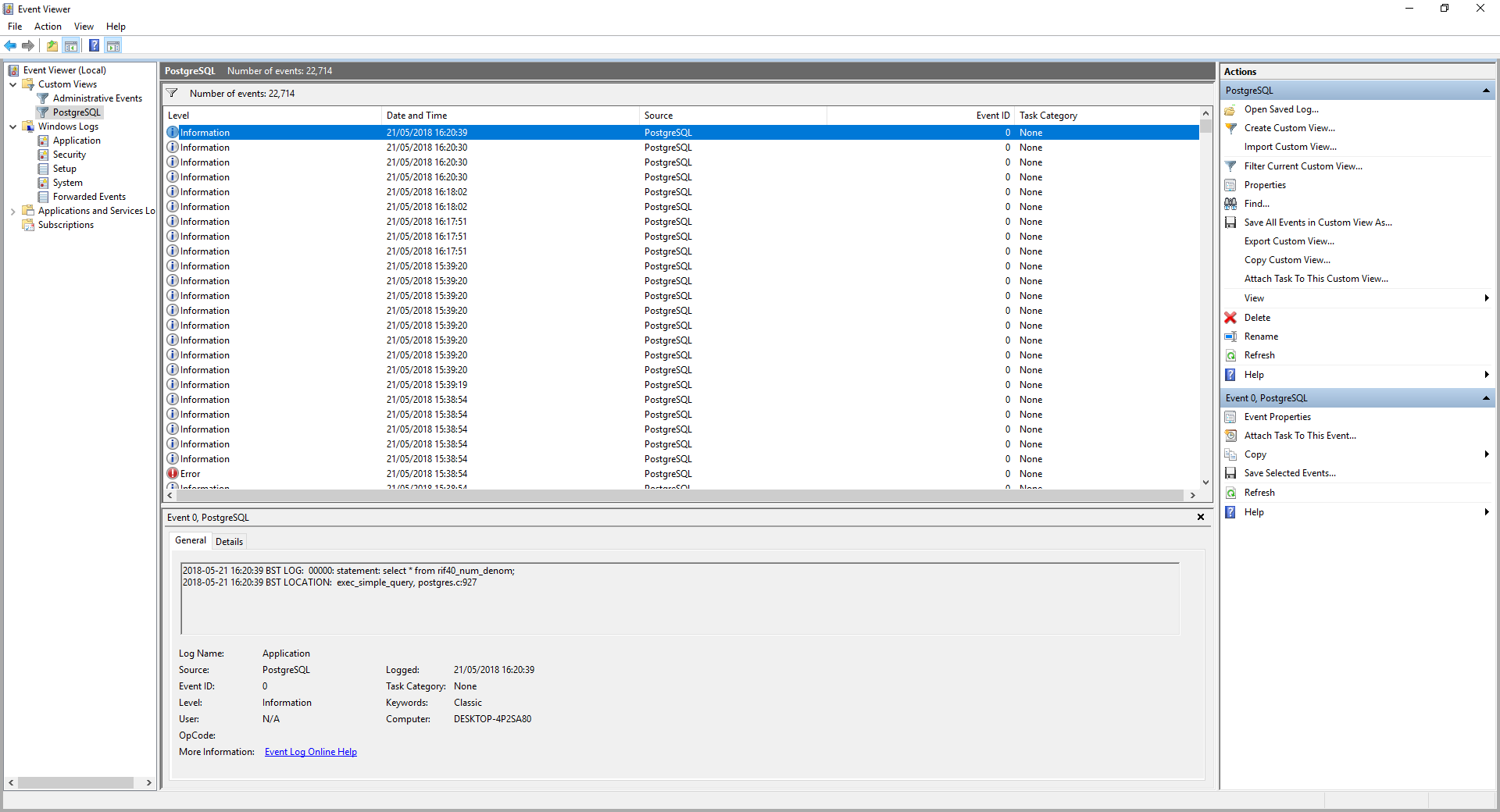

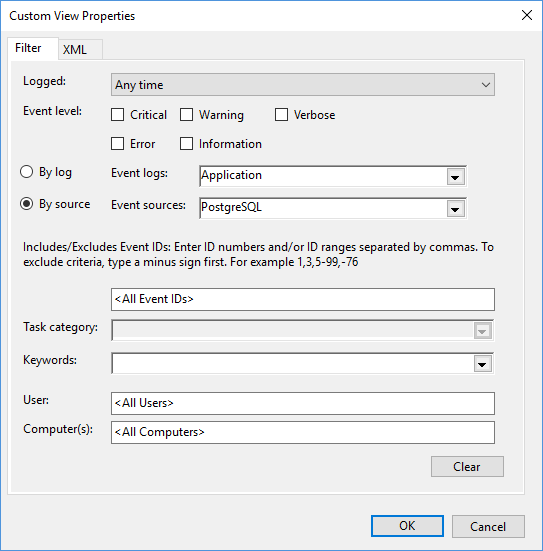

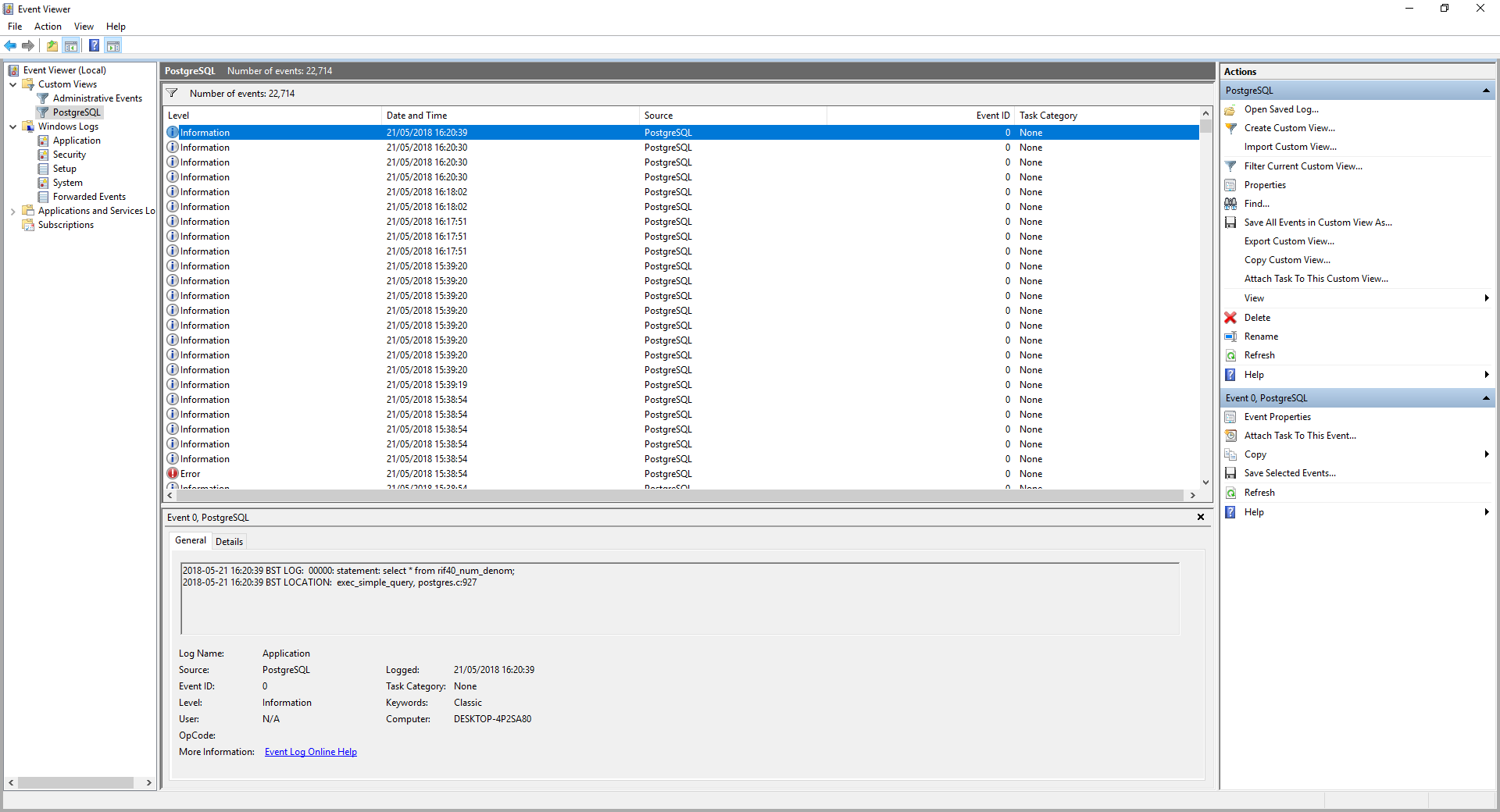

Create a Postgres event log custom view, create a custom event view in the Event Viewer.

An XML setup file postgres_event_log_custom_view.xml

is also provided:

<ViewerConfig>

<QueryConfig>

<QueryParams>

<Simple>

<Channel>Application</Channel>

<RelativeTimeInfo>0</RelativeTimeInfo>

<Source>PostgreSQL</Source>

<BySource>True</BySource>

</Simple>

</QueryParams>

<QueryNode>

<Name>PostgreSQL</Name>

<Description>Postgres DB</Description>

<QueryList>

<Query Id="0" Path="Application">

<Select Path="Application">*[System[Provider[@Name='PostgreSQL']]]</Select>

</Query>

</QueryList>

</QueryNode>

</QueryConfig>

</ViewerConfig>

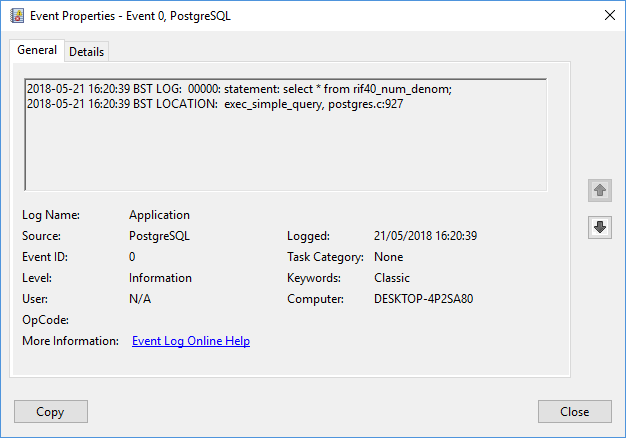

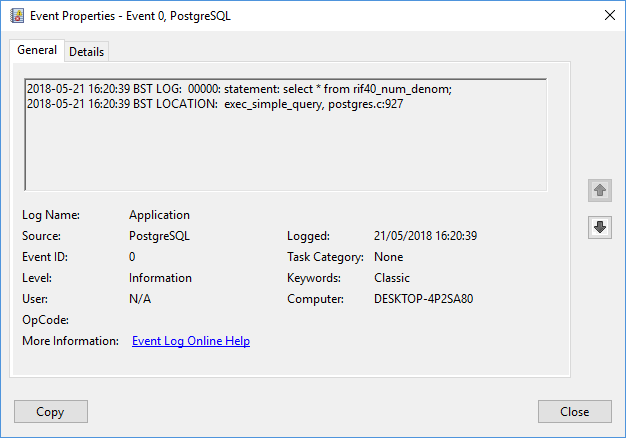

An example log file extract (postgresql-2018-05-21.log):

2018-05-21 16:20:39 BST LOG: 00000: statement: select * from rif40_num_denom;

2018-05-21 16:20:39 BST LOCATION: exec_simple_query, postgres.c:927

The equivalent CSV file entry (postgresql-2018-05-21.csv) is far more detailed:

2018-05-21 16:20:39.261 BST,"peter","sahsuland",9900,"::1:62022",5b02e3be.26ac,4,"",2018-05-21 16:20:30 BST,2/237,0,LOG,00000,"statement: select * from rif40_num_denom;",,,,,,,,"exec_simple_query, postgres.c:927","RIF (psql)"

2

By loading the CSV log in to a table it can then be queried:

CREATE TABLE postgres_log

(

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

PRIMARY KEY (session_id, session_line_num)

);

CREATE TABLE

\copy postgres_log FROM 'E:\Postgres\data\pg_log\postgresql-2018-05-21.csv' WITH csv;

COPY 25

sahsuland=> SELECT * FROM postgres_log WHERE user_name = USER AND message LIKE '%select * from rif40_num_denom;';

log_time | user_name | database_name | process_id | connection_from | session_id | session_line_num | command_tag | session_start_time | virtual_transaction_id | transaction_id | error_severity | sql_state_code | message | detail | hint | internal_query | internal_query_pos | context | query | query_pos | location | application_name

----------------------------+-----------+---------------+------------+-----------------+---------------+------------------+-------------+------------------------+------------------------+----------------+----------------+----------------+-------------------------------------------+--------+------+----------------+--------------------+---------+-------+-----------+-----------------------------------+------------------

2018-05-21 16:20:39.261+01 | peter | sahsuland | 9900 | ::1:62022 | 5b02e3be.26ac | 4 | | 2018-05-21 16:20:30+01 | 2/237 | 0 | LOG | 00000 | statement: select * from rif40_num_denom; | | | | | | | | exec_simple_query, postgres.c:927 | RIF (psql)

(1 row)

Formatted the CSV log entry is:

| Log field name |

Value |

| log_time |

2018-05-21 16:20:39.261+01 |

| user_name |

peter |

| database_name |

sahsuland |

| process_id |

9900 |

| connection_from |

::1:62022 |

| session_id |

5b02e3be.26ac |

| session_line_num |

4 |

| command_tag |

|

| session_start_time |

2018-05-21 16:20:30+01 |

| virtual_transaction_id |

2/237 |

| transaction_id |

0 |

| error_severity |

LOG |

| sql_state_code |

00000 |

| message |

statement: select * from rif40_num_denom; |

| detail |

|

| hint |

|

| internal_query |

|

| internal_query_pos |

|

| context |

|

| query |

|

| query_pos |

|

| location |

exec_simple_query, postgres.c:927 |

| application_name |

RIF (psql) |

The equivalent PostgreSQL Windows log entry entry is:

SQL Server

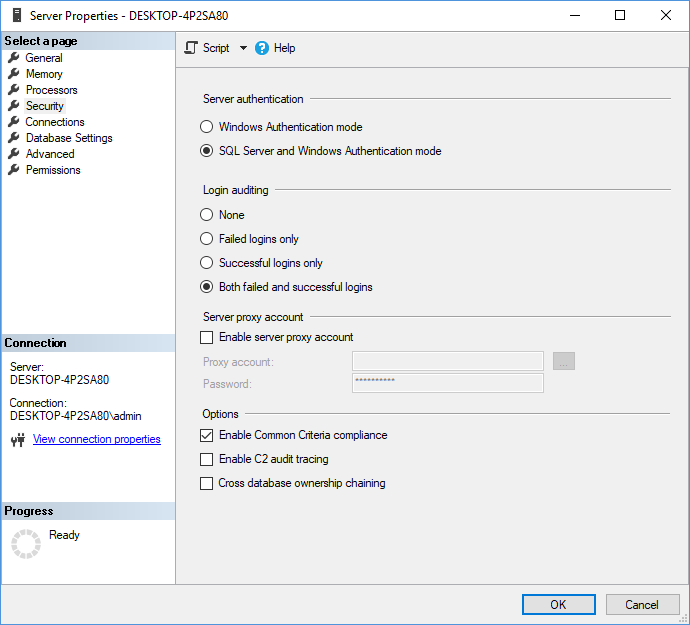

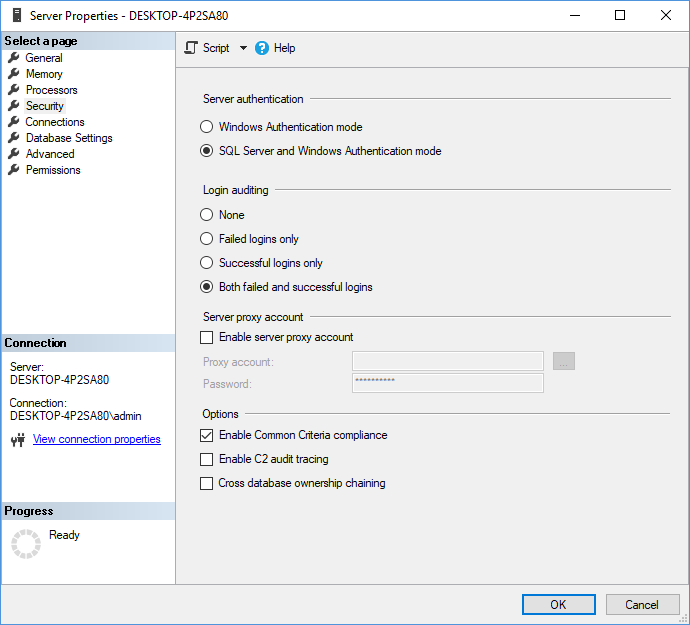

See:

To setup (Common criteria compliance:

- Use the SQL Server management studio server properties pane:

- Also check audit failed and successful logins;

- Restart SQL Server;

TO BE ADDED: auditing DDL and DML (without using triggers!)

Backup and recovery

As with all relational databases; cold backups are recommended as a baselines and should be carried out using your enterprise backup tools with the database down. Two further backup solutions are

suggested:

- Logical backups (recommended given the likely size of most RIF databses);

- Continuous archiving and point-in-time recovery (PITR) for more advanced sites;

Because of the fully transactional nature of both Postgres and SQL Server consistent logical backups can be run with users logged on, the database does not need to be put into a quiescent state.

Please bear in mind that continuous archiving and point-in-time recovery greatly expands the recovery options allowing for corruption repair and recovery from

object deletion incidents.

Replication is invariably very complex and is beyond the scope of this manual. It is recommended for very large sites with Postgres because of Postgres’ poor support for corruption

detection and repair.

Postgres

Logical Backups

Postgres logical backup and recovery uses pg_dump and pg_restore. pg_dump only dumps a single database. To backup global objects that are common to all databases in a cluster, such as roles and tablespaces,

use pg_dumpall.

Two basic formats: 1) a SQL script to recreate the database using psql and 2) a binary dump file to restore using pg_restore.

- SQL Script:

pg_dump -U postgres -w -F plain -v -C sahsuland > sahsuland.sql

- Binary dump file:

pg_dump -U postgres -w -F custom -v sahsuland > sahsuland.dump

Where the database name is sahsuland.

Flags:

- -U postgres:

- -F <format%gt;: dump format: plain (SQL), custom or directory (pg_restore);

- -w: do not prompt for a password;

- -v: be verbose;

To restore a custom or directory pg_dump file: pg_restore -d sahsuland -U postgres -v sahsuland.dump. This is the method uses to create the example database sahsuland

from the development database sahsuland_dev. See:

Continuous Archiving and Point-in-Time Recovery

Postgres supports continuous archiving and point-in-time recovery (PITR). See:

SQL Server

Logical Backups

SQL Server logical backup and restore are SQL commands entered using sqlcmd. See:

BACKUP DATABASE [sahsuland] TO DISK='C:\Users\Peter\Documents\GitHub\rapidInquiryFacility\rifDatabase\SQLserver\installation\..\production\sahsuland.bak'

WITH COPY_ONLY, INIT;

GO

Msg 4035, Level 0, State 1, Server PETER-PC\SAHSU, Line 6

Processed 42040 pages for database 'sahsuland_dev', file 'sahsuland_dev' on file 54.

Msg 4035, Level 0, State 1, Server PETER-PC\SAHSU, Line 6

Processed 2 pages for database 'sahsuland_dev', file 'sahsuland_dev_log' on file 54.

Msg 3014, Level 0, State 1, Server PETER-PC\SAHSU, Line 6

BACKUP DATABASE successfully processed 42042 pages in 3.940 seconds (83.363 MB/sec).

To restore a backup:

RESTORE DATABASE [sahsuland]

FROM DISK='C:\Users\Peter\Documents\GitHub\rapidInquiryFacility\rifDatabase\SQLserver\installation\..\production\sahsuland.bak'

WITH REPLACE;

Msg 4035, Level 0, State 1, Server PETER-PC\SAHSU, Line 1

Processed 45648 pages for database 'sahsuland', file 'sahsuland' on file 1.

Msg 4035, Level 0, State 1, Server PETER-PC\SAHSU, Line 1

Processed 14 pages for database 'sahsuland', file 'sahsuland_log' on file 1.

Msg 3014, Level 0, State 1, Server PETER-PC\SAHSU, Line 1

RESTORE DATABASE successfully processed 45662 pages in 5.130 seconds (69.538 MB/sec).

See the script rif40_production_creation.sql if you want to rename the database, its files or to move the files.

Continuous Archiving and Point-in-Time Recovery

This requires a transaction log backup, i.e. not the copy only version created in the previous section. You will need to do a full backup, followed by differential backups:

-- Create a full database backup first.

BACKUP DATABASE sahsuland

TO sahsuland

WITH INIT;

GO

-- Time elapses.

-- Create a differential database backup, appending the backup

-- to the backup device containing the full database backup.

BACKUP DATABASE sahsuland

TO sahsuland

WITH DIFFERENTIAL;

GO

Log backups require the database to be in the full recovery model, not the default simple recovery model. See:

Back Up a Transaction Log

For restoration see:

Restore a SQL Server Database to a Point in Time (Full Recovery Model

Patching

Alter scripts are numbered sequentially and have the same functionality in both ports, e.g. v4_0_alter_10.sql. Scripts are safe to run more than once.

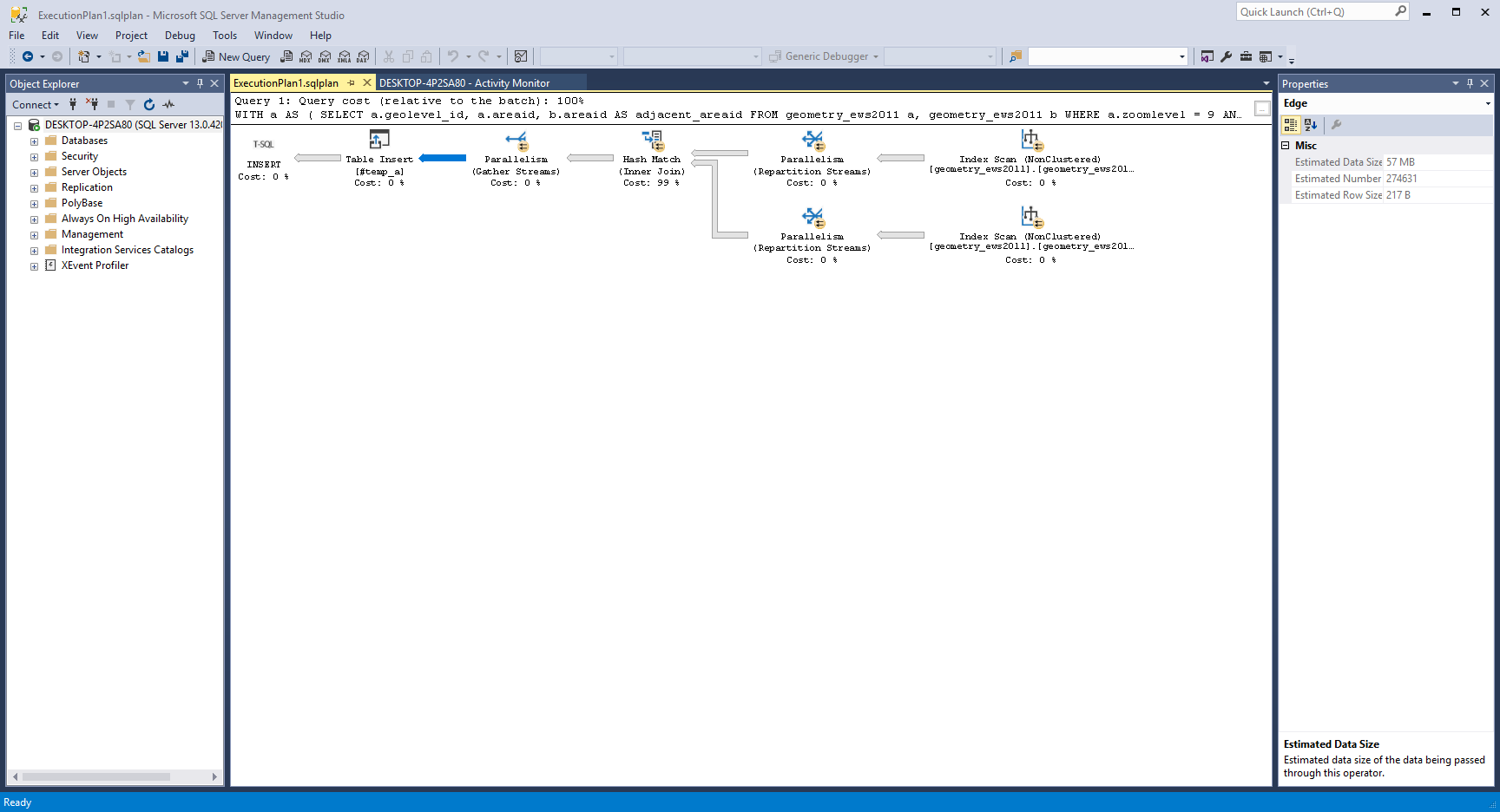

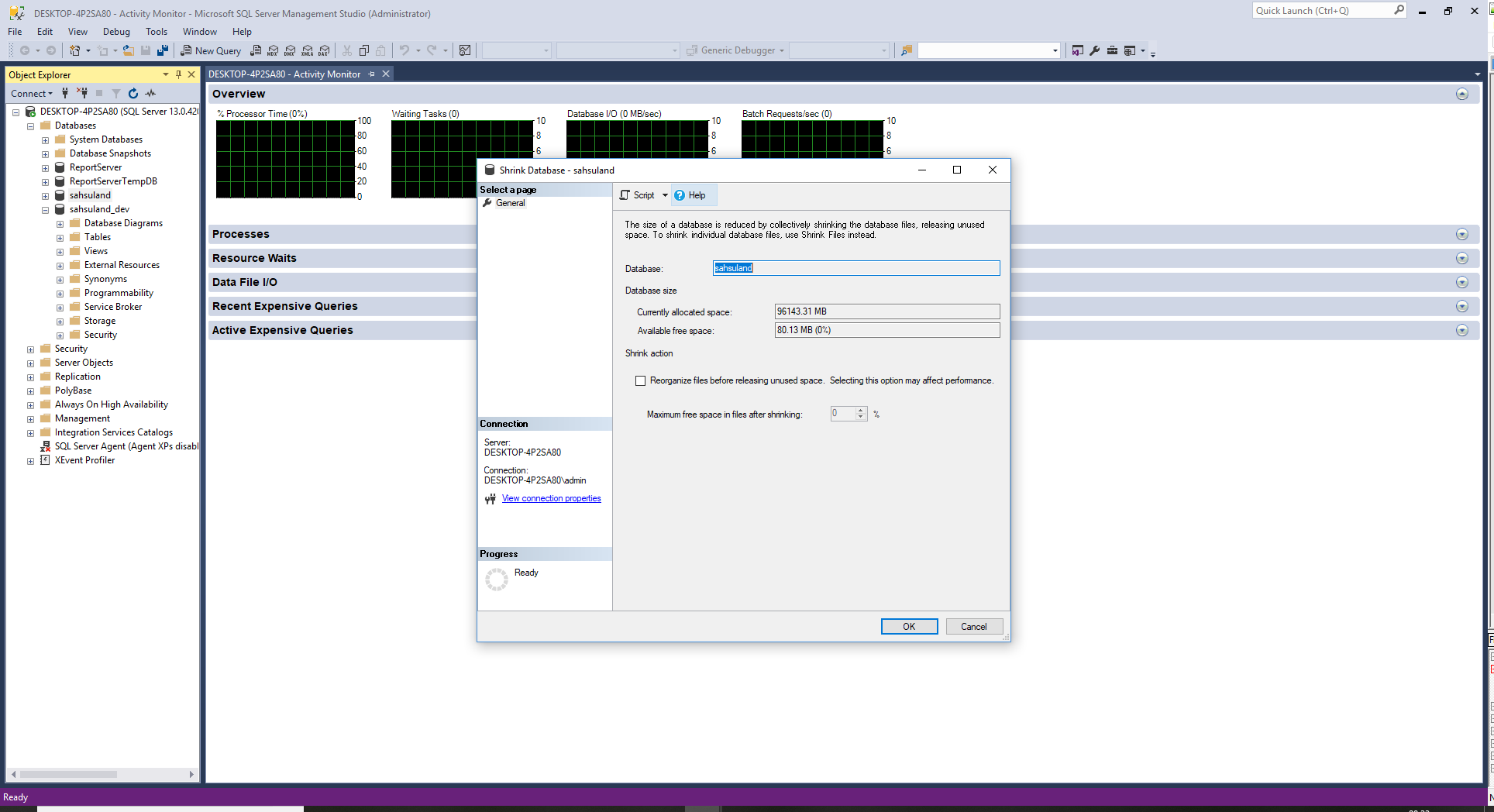

Pre-built databases are supplied patched up to date.